Robotic arms are used today for assembly, packaging, inspection, and many more applications. However, they are still preprogrammed to perform specific and often repetitive tasks. To meet the increasing need for adaptability in most environments, perceptive arms are needed to make decisions and adjust behavior based on real-time data. This leads to more flexibility across tasks in collaborative environments, and improves safety through hazard awareness.

This edition of NVIDIA Robotics Research and Development Digest (R2D2) explores several robot dexterity, manipulation, and grasping workflows and AI models from NVIDIA Research (listed below) and how they can address key robot challenges such as adaptability and data scarcity:

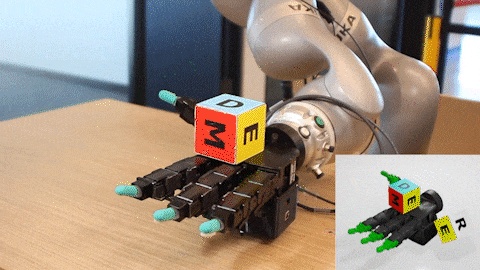

- DextrAH-RGB: A workflow for dexterous grasping from stereo RGB input.

- DexMimicGen: A data generation pipeline for bimanual dexterous manipulation using imitation learning (IL). Featured at ICRA 2025.

- GraspGen: A synthetic dataset of over 57 million grasps for different robots and grippers.

What are dexterous robots?

Dexterous robots manipulate objects with precision, adaptability, and efficiency. Robot dexterity involves fine motor control, coordination, and the ability to handle a wide range of tasks, often in unstructured environments. Key aspects of robot dexterity include grip, manipulation, tactile sensitivity, agility, and coordination.

Robot dexterity is crucial in industries like manufacturing, healthcare, and logistics, enabling automation of tasks that traditionally require human-like precision.

NVIDIA robot dexterity and manipulation workflows and models

Dexterous grasping is a challenging task in robotics, requiring robots to manipulate a wide variety of objects with precision and speed. Traditional methods struggle with reflective objects and do not generalize well to new objects or dynamic environments.

NVIDIA Research addresses these challenges by developing end-to-end foundation models and workflows that enable robust manipulation across objects and environments.

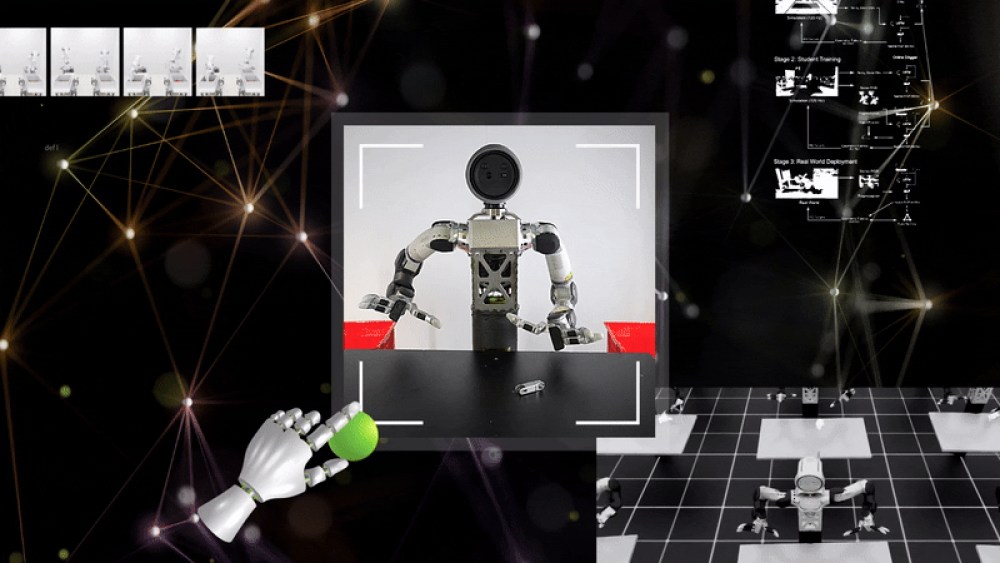

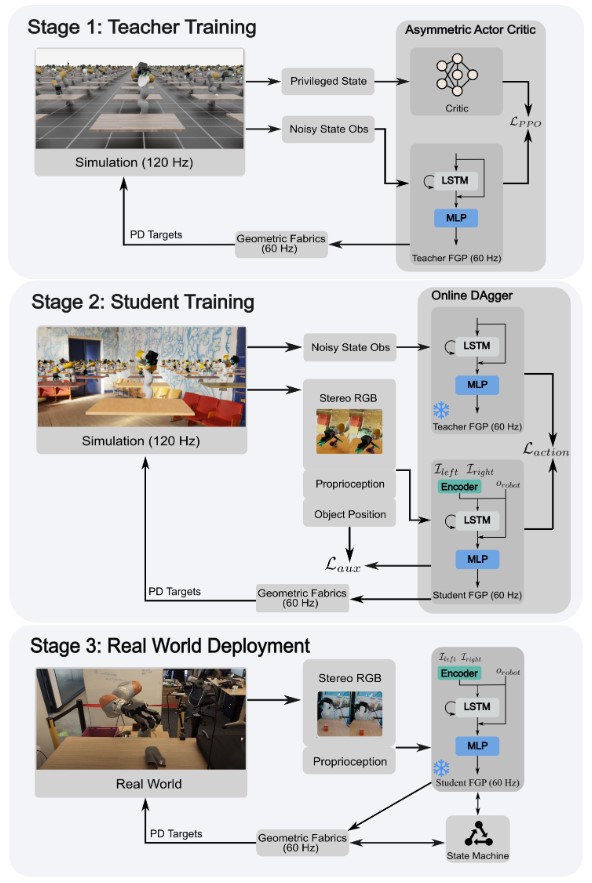

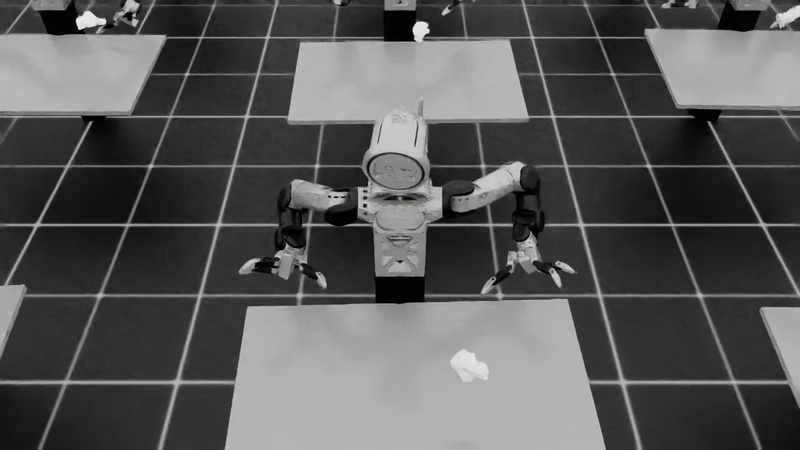

The training pipeline consists of two stages. First, a teacher policy is trained in simulation using reinforcement learning (RL). The teacher is a privileged fabric-guided policy (FGP), meaning it observes ground-truth simulator state that is unavailable on the real robot, and it acts on a geometric fabric action space. Geometric fabrics are a form of vectorized low-level control that define motion as joint position, velocity, and acceleration signals, which are sent as commands to the robot’s controller. Because collision avoidance and goal-reaching behaviors are embedded in the fabric itself, the policy stays safe and reactive at deployment, which enables rapid iteration.
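
To make the idea of an action space that carries its own goal-reaching and avoidance behavior concrete, here is a minimal single-joint sketch. It is not NVIDIA’s geometric fabric formulation: it assumes a plain spring-damper goal term plus a stiff linear barrier near hypothetical joint limits, integrated into the position, velocity, and acceleration commands described above. The function names, gains, and limits are all illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class JointCommand:
    """Position, velocity, and acceleration targets sent to a joint controller."""
    position: float
    velocity: float
    acceleration: float


def fabric_like_step(q, qd, q_goal, q_min=-1.0, q_max=1.0, dt=0.01,
                     k_goal=20.0, damping=9.0, k_limit=400.0, margin=0.1):
    """One control tick for one joint (hypothetical, simplified stand-in for a fabric)."""
    # Goal-reaching term: spring toward the goal plus velocity damping.
    acc = k_goal * (q_goal - q) - damping * qd
    # Avoidance term: stiff linear push away from a joint limit once inside the margin.
    if q_max - q < margin:
        acc -= k_limit * (margin - (q_max - q))
    if q - q_min < margin:
        acc += k_limit * (margin - (q - q_min))
    # Semi-implicit Euler integration into velocity and position commands.
    qd_next = qd + acc * dt
    q_next = q + qd_next * dt
    # Safety net: never command a position outside the limits.
    if q_next > q_max:
        q_next, qd_next = q_max, min(qd_next, 0.0)
    elif q_next < q_min:
        q_next, qd_next = q_min, max(qd_next, 0.0)
    return JointCommand(q_next, qd_next, acc)


def rollout(q_goal, steps=500):
    """Run the controller from rest at q = 0 and record every command."""
    q, qd = 0.0, 0.0
    commands = []
    for _ in range(steps):
        cmd = fabric_like_step(q, qd, q_goal)
        q, qd = cmd.position, cmd.velocity
        commands.append(cmd)
    return commands


reachable = rollout(q_goal=0.5)
blocked = rollout(q_goal=2.0)  # goal lies beyond q_max
```

In this sketch the learned policy would only choose `q_goal`; whatever target it picks, the layer underneath keeps the commanded motion inside the limits, which is the property that makes exploration safer.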

The teacher policy includes an LSTM layer that lets it reason about and adapt to the world’s physics. This helps it learn corrective behaviors, such as regrasping and recognizing whether a grasp has succeeded, in response to the current dynamics. The first stage of training builds robustness and adaptability through domain randomization: physics, visual, and perturbation parameters are varied, and environmental difficulty increases progressively as the teacher policy trains.
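
A minimal sketch of domain randomization with a difficulty curriculum follows. The parameter names, nominal values, and ranges are hypothetical, and the curriculum rule (widen every sampling range linearly with a difficulty level that only rises once the success rate clears a threshold) is an assumption about one reasonable way to "progressively increase environmental difficulty", not the published schedule.

```python
import random
from dataclasses import dataclass


@dataclass
class ParamRange:
    nominal: float
    max_spread: float  # largest +/- deviation from nominal at full difficulty

    def sample(self, difficulty, rng):
        spread = self.max_spread * difficulty
        return rng.uniform(self.nominal - spread, self.nominal + spread)


# Hypothetical parameter groups and ranges, chosen only for illustration.
RANDOMIZATION = {
    "physics": {"object_mass_kg": ParamRange(0.5, 0.4), "friction": ParamRange(1.0, 0.5)},
    "visual": {"light_intensity": ParamRange(1.0, 0.6)},
    "perturbation": {"push_force_n": ParamRange(0.0, 5.0)},  # sign encodes direction
}


def sample_episode_params(difficulty, rng):
    """Draw one episode's parameters; ranges widen linearly with difficulty in [0, 1]."""
    difficulty = min(max(difficulty, 0.0), 1.0)
    return {
        f"{group}/{name}": param.sample(difficulty, rng)
        for group, params in RANDOMIZATION.items()
        for name, param in params.items()
    }


def update_difficulty(difficulty, success_rate, threshold=0.8, step=0.05):
    """Curriculum rule (assumed): raise difficulty only once the policy succeeds often enough."""
    if success_rate >= threshold:
        return min(difficulty + step, 1.0)
    return difficulty


rng = random.Random(0)
easy = sample_episode_params(0.0, rng)  # every value equals its nominal
hard = sample_episode_params(1.0, rng)  # values spread over the full ranges
```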

In the second stage of training, the teacher policy is distilled into an RGB-based student policy in simulation using photorealistic tiled rendering. This step uses an imitation learning framework called DAgger. The student policy receives RGB images from a stereo camera, enabling it to implicitly infer depth and object positions.
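
The core of DAgger (Dataset Aggregation) is that the student drives more and more of the rollouts, yet every state it visits is labeled with the teacher’s action and added to one growing dataset. The toy sketch below shows only that loop. It assumes a scalar state, a hypothetical linear teacher, a linear student fit by least squares, and a noisy copy of the state standing in for stereo RGB; none of these are the DextrAH-RGB networks.

```python
import random


def teacher_action(state):
    """Hypothetical privileged expert: sees the exact state and returns a stabilizing action."""
    return -0.8 * state


def observe(state, rng):
    """Stand-in for the student's sensor (stereo RGB in the real pipeline): a noisy copy of the state."""
    return state + rng.gauss(0.0, 0.01)


def fit_weight(dataset):
    """Least-squares fit of a linear student a = w * o over the aggregated dataset."""
    num = sum(o * a for o, a in dataset)
    den = sum(o * o for o, _ in dataset)
    return num / den if den > 0 else 0.0


def dagger(iterations=5, horizon=20, seed=0):
    rng = random.Random(seed)
    dataset = []   # aggregated (observation, teacher_action) pairs across all iterations
    weight = 0.0   # student parameter
    for i in range(iterations):
        beta = 0.5 ** i  # probability of executing the teacher's action; decays each iteration
        state = rng.uniform(-1.0, 1.0)
        for _ in range(horizon):
            obs = observe(state, rng)
            # Core DAgger step: label every visited state with the teacher's action,
            # even when the student is the one driving the rollout.
            dataset.append((obs, teacher_action(state)))
            action = teacher_action(state) if rng.random() < beta else weight * obs
            state = state + action + rng.gauss(0.0, 0.1)  # toy dynamics with process noise
        weight = fit_weight(dataset)  # retrain the student on the aggregated data
    return weight, dataset


weight, data = dagger()
```

Labeling the student’s own visited states is what separates DAgger from plain behavior cloning: the student learns to recover from the situations its own mistakes lead to, not only from states the teacher would have visited.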

Simulation-to-real with Boston Dynamics Atlas MTS robot

NVIDIA and Boston Dynamics have been working together to train and deploy DextrAH-RGB. Figure 2 and Video 2 show the result deployed on Atlas’ upper torso: a robotic system driven by a generalist policy with robust, zero-shot simulation-to-real grasping capabilities.

The system performs a variety of grasps with Atlas’ three-fingered grippers, handling both lightweight and heavy objects, and shows emergent failure detection and retry behaviors.

DexMimicGen for bimanual manipulation data generation

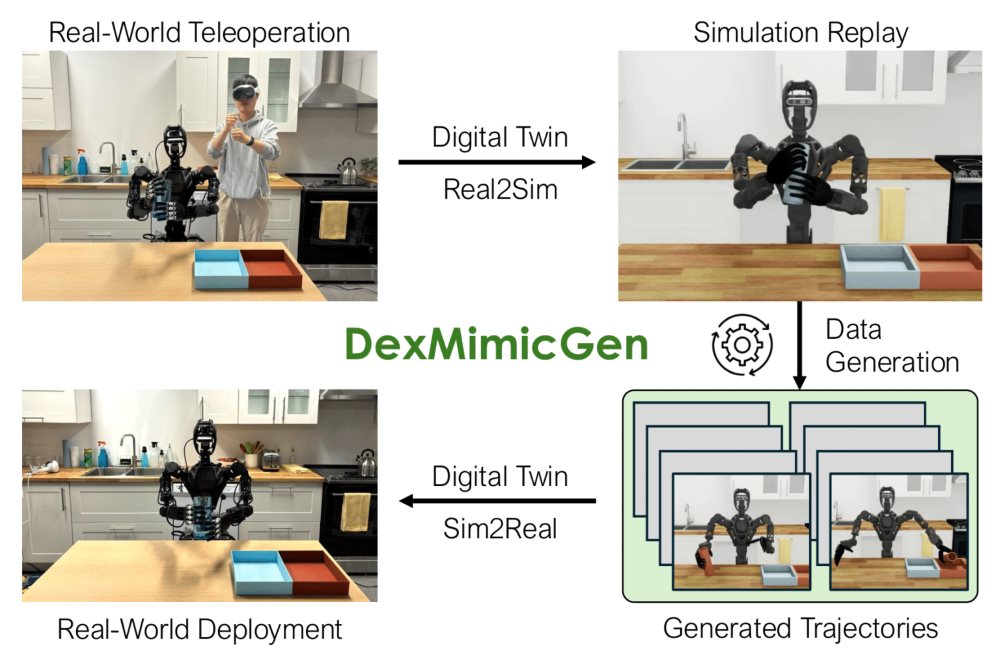

DexMimicGen is a workflow for bimanual manipulation data generation that uses a small number of human demonstrations to generate large-scale trajectory datasets. The aim is to reduce the tedious task of manual data collection by enabling robots to learn actions in simulation, which can be transferred to the real world. This workflow addresses the challenge of data scarcity in IL for bimanual dexterous robots like humanoids.

DexMimicGen uses simulation-based augmentation for generating datasets. First, a human demonstrator collects a handful of demonstrations using a teleoperation device. DexMimicGen then generates a large dataset of demonstration trajectories in simulation. For example, in the initial publication, researchers generated 21K demos from just 60 human demos using DexMimicGen. Finally, a policy is trained on the generated dataset using IL to perform a manipulation task and deployed to a physical robot.
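
One way to turn a handful of demonstrations into many is to replay each object-centric subtask segment relative to a newly placed object, so the end effector keeps the same pose relative to the object. The sketch below illustrates that transform idea in 2D. Treating it as DexMimicGen’s exact mechanism is an assumption, and the `Pose2D` type, function names, and placement ranges are hypothetical; a full pipeline would also execute each candidate in simulation and keep only the successful ones.

```python
import math
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Pose2D:
    """Planar rigid transform (x, y, heading); a 2D stand-in for 6D poses."""
    x: float
    y: float
    theta: float  # radians

    def compose(self, other):
        """Return self * other, i.e. express `other` in this pose's parent frame."""
        c, s = math.cos(self.theta), math.sin(self.theta)
        return Pose2D(self.x + c * other.x - s * other.y,
                      self.y + s * other.x + c * other.y,
                      self.theta + other.theta)

    def inverse(self):
        c, s = math.cos(self.theta), math.sin(self.theta)
        return Pose2D(-(c * self.x + s * self.y), -(-s * self.x + c * self.y), -self.theta)


def retarget_segment(src_eef_poses, src_object_pose, new_object_pose):
    """Replay a subtask so the end effector keeps the same pose relative to the object."""
    delta = new_object_pose.compose(src_object_pose.inverse())
    return [delta.compose(p) for p in src_eef_poses]


def generate_demos(src_eef_poses, src_object_pose, num_demos, rng):
    """Create candidate demos for randomly placed objects (hypothetical ranges)."""
    demos = []
    for _ in range(num_demos):
        new_obj = Pose2D(rng.uniform(-0.2, 0.2), rng.uniform(-0.2, 0.2),
                         rng.uniform(-math.pi, math.pi))
        # A full pipeline would also execute each candidate in simulation and
        # keep only successful ones; that step is omitted here.
        demos.append((new_obj, retarget_segment(src_eef_poses, src_object_pose, new_obj)))
    return demos


source_object = Pose2D(0.1, 0.0, 0.0)
source_segment = [Pose2D(0.0, -0.1, 0.0), Pose2D(0.05, -0.05, 0.3), Pose2D(0.1, 0.0, 0.5)]
generated = generate_demos(source_segment, source_object, num_demos=350, rng=random.Random(0))
```

The reported ratio of 21K generated demos from 60 human demos works out to 350 generated trajectories per source demo, which is why the example requests 350.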

Bimanual manipulation is challenging because the two arms must coordinate precisely across different kinds of tasks. Parallel tasks, like picking up a different object with each arm, require independent control policies. Coordinated tasks, like lifting a large object, require the arms to synchronize motion and timing. Sequential tasks require subtasks to be completed in a certain order, like moving a box with one hand and then putting an object in it with the other.

DexMimicGen accounts for these varying requirements during data generation using a ‘parallel, coordination, and sequential’ taxonomy of subtasks. It uses asynchronous execution strategies for independent arm subtasks, synchronization mechanisms for coordination subtasks, and ordering constraints for sequential subtasks. This ensures precise alignment and logical task execution during data generation.
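
The three subtask types map naturally onto a small scheduler. In the sketch below, parallel subtasks start whenever their arm is free, coordination subtasks wait for their paired subtask on the other arm and start together, and sequential subtasks wait for a named prerequisite. The `Subtask` fields and the scheduling logic are hypothetical illustrations of the taxonomy, not DexMimicGen’s implementation; the example tasks echo the ones described above.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass
class Subtask:
    name: str
    arm: str                       # "left" or "right"
    kind: str                      # "parallel", "coordination", or "sequential"
    duration: int                  # simulation steps
    after: Optional[str] = None    # sequential: subtask that must finish first
    partner: Optional[str] = None  # coordination: paired subtask on the other arm


def schedule(subtasks: List[Subtask]) -> Dict[str, Tuple[int, int]]:
    """Assign (start, end) steps; each arm runs its own subtasks in list order."""
    queues: Dict[str, List[Subtask]] = {}
    for task in subtasks:
        queues.setdefault(task.arm, []).append(task)
    arm_free = {arm: 0 for arm in queues}
    timing: Dict[str, Tuple[int, int]] = {}

    while any(queues.values()):
        progressed = False
        for arm, queue in queues.items():
            if not queue:
                continue
            task = queue[0]
            start = arm_free[arm]
            if task.kind == "sequential":
                # Ordering constraint: wait until the prerequisite subtask has finished.
                if task.after not in timing:
                    continue
                start = max(start, timing[task.after][1])
            elif task.kind == "coordination":
                # Synchronization: both arms start their paired subtasks together.
                other = next((q for q in queues.values() if q and q[0].name == task.partner), None)
                if other is None:
                    continue
                partner = other[0]
                start = max(start, arm_free[partner.arm])
                end = start + max(task.duration, partner.duration)
                for t in (task, partner):
                    timing[t.name] = (start, end)
                    arm_free[t.arm] = end
                    queues[t.arm].pop(0)
                progressed = True
                continue
            elif task.kind != "parallel":
                raise ValueError(f"unknown subtask kind: {task.kind}")
            # Parallel (asynchronous) and released sequential subtasks are placed here.
            timing[task.name] = (start, start + task.duration)
            arm_free[arm] = start + task.duration
            queue.pop(0)
            progressed = True
        if not progressed:
            raise ValueError("unsatisfiable subtask constraints")
    return timing


tasks = [
    Subtask("left_pick_can", "left", "parallel", 5),
    Subtask("right_pick_cup", "right", "parallel", 8),
    Subtask("left_lift_tray", "left", "coordination", 4, partner="right_lift_tray"),
    Subtask("right_lift_tray", "right", "coordination", 6, partner="left_lift_tray"),
    Subtask("left_move_box", "left", "parallel", 3),
    Subtask("right_place_in_box", "right", "sequential", 2, after="left_move_box"),
]
timeline = schedule(tasks)
```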

In real-world deployment, a humanoid policy trained on data generated through DexMimicGen’s real-to-sim-to-real pipeline achieved a 90% success rate on a can-sorting task. For comparison, the same model trained on human demonstrations alone achieved 0% success. These results highlight DexMimicGen’s effectiveness in reducing human effort while enabling robust robot learning for complex manipulation tasks.

GraspGen dataset for multiple robots and grippers

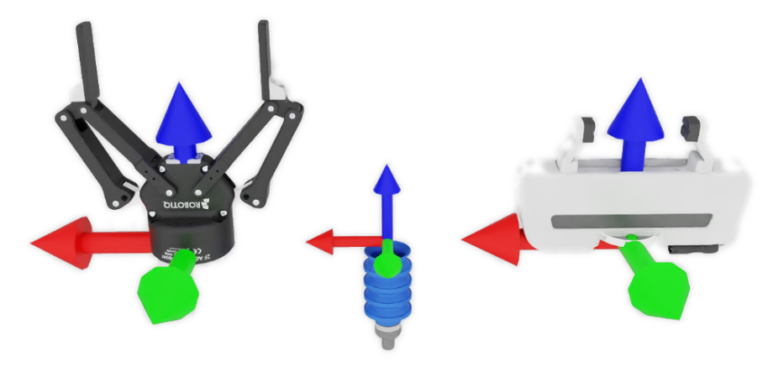

To support research, GraspGen provides a new simulated dataset of 57 million grasps for three different grippers, hosted on Hugging Face. The dataset includes 6D gripper transformations and success labels for different object meshes.
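
A dataset of 6D gripper transformations with success labels can be consumed with very little code. The sketch below assumes each grasp is stored as a 4x4 homogeneous transform plus a boolean label; the `GraspRecord` fields, gripper keys, and mesh IDs are hypothetical and do not describe the actual file layout on Hugging Face. It validates that each transform is a proper rigid pose and reports the success rate per gripper.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List

Matrix4 = List[List[float]]


@dataclass
class GraspRecord:
    object_mesh: str    # hypothetical mesh identifier
    gripper: str        # hypothetical key, e.g. "franka_panda" or "suction"
    transform: Matrix4  # 4x4 homogeneous gripper pose in the object frame
    success: bool       # simulated grasp outcome label


def is_rigid_transform(t: Matrix4, tol: float = 1e-6) -> bool:
    """Check for a valid 6D pose: orthonormal rotation, determinant +1, last row [0, 0, 0, 1]."""
    if any(abs(a - b) > tol for a, b in zip(t[3], (0.0, 0.0, 0.0, 1.0))):
        return False
    for i in range(3):
        for j in range(3):
            dot = sum(t[k][i] * t[k][j] for k in range(3))  # column i . column j
            if abs(dot - (1.0 if i == j else 0.0)) > tol:
                return False
    r = [row[:3] for row in t[:3]]
    det = (r[0][0] * (r[1][1] * r[2][2] - r[1][2] * r[2][1])
           - r[0][1] * (r[1][0] * r[2][2] - r[1][2] * r[2][0])
           + r[0][2] * (r[1][0] * r[2][1] - r[1][1] * r[2][0]))
    return abs(det - 1.0) <= tol  # rules out reflections


def success_rate_by_gripper(records: List[GraspRecord]) -> Dict[str, float]:
    totals, wins = defaultdict(int), defaultdict(int)
    for rec in records:
        if not is_rigid_transform(rec.transform):
            continue  # skip malformed poses
        totals[rec.gripper] += 1
        wins[rec.gripper] += int(rec.success)
    return {g: wins[g] / totals[g] for g in totals}


upright = [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0], [0.0, 0.0, 1.0, 0.1], [0.0, 0.0, 0.0, 1.0]]
yaw_90 = [[0.0, -1.0, 0.0, 0.02], [1.0, 0.0, 0.0, 0.0], [0.0, 0.0, 1.0, 0.08], [0.0, 0.0, 0.0, 1.0]]
mirrored = [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0], [0.0, 0.0, -1.0, 0.0], [0.0, 0.0, 0.0, 1.0]]
records = [
    GraspRecord("mug_01", "franka_panda", upright, True),
    GraspRecord("mug_01", "franka_panda", yaw_90, False),
    GraspRecord("box_07", "suction", upright, True),
    GraspRecord("box_07", "suction", mirrored, False),  # invalid pose, skipped
]
rates = success_rate_by_gripper(records)
```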

The three grippers are the Franka Panda gripper, the Robotiq 2F-140 industrial gripper, and a single-contact suction gripper. GraspGen was entirely generated in simulation, demonstrating the benefits of automatic data generation to scale datasets in size and diversity.